South by Southwest (SXSW) 2023, held in Austin, Texas, in March this year, brought together companies and creators from a wide range of industries to celebrate world-leading technology, music, and film.

Dentsu Inc. and Dentsu Creative X Inc. participated in the interactive section of the Creative Industries Expo, exhibiting three product prototypes that offer completely new experiences built around the concept of yet-unnamed sensations. Here we look at how technologies were creatively combined to enhance three of our senses: touch, taste, and smell.

Product 1: Phantom Snack / Munching without eating

Phantom Snack is a new virtual eating system. We interviewed Kou Izumi from Dentsu’s project team and asked him how people can have the sensation of munching on a snack without actually eating anything.

A craving to chew

SXSW 2023 report editor: Please tell us about Phantom Snack.

Kou Izumi: Phantom Snack offers a completely new and guilt-free way to experience eating. It lets you sense the texture and taste of, for example, a crisp, crunchy snack without putting anything in your mouth. Because you don’t eat anything, there is no calorie intake at all. In fact, the more you chew, the more calories you burn.

The idea for this originated with my own experience. I had a habit of eating snacks late at night, but not because I was hungry; I just wanted something to munch on. I thought that if I could find a way to eat without adding calories, for the times when the body isn’t craving calories but just wants to munch on something, I could help a lot of people who, like me, worry about snacking too much. That’s what motivated me.

I imagined a way of doing this around 20 years ago, but I never had an opportunity to develop it. If someone had created such a product, I would have bought one. But no such product ever appeared, so when the company solicited ideas for product development, I submitted my idea and made it happen.

Editor: How did you assemble a team for SXSW?

Izumi: We put together a team by recruiting members from Dentsu, Dentsu Creative X, Dentsu Digital, and a few of our partner companies. We have a creative director in charge of planning, coding, hardware creation, development, and production; a technologist responsible for naming, planning, and engineering of the visual system; and an art director in charge of the booth’s user experience, design, logos, and animation.

Other team members were responsible for the AI development of the facial recognition system; the design and production of the booth; the creation of motion graphics; the audio production, signage and art; and promotional video direction. We also worked with a research institute to study scientific findings related to chewing and the brain.

Three of us managed the booth and talked with visitors at the expo. None of us had attended SXSW before, and we were not used to speaking English. We felt nervous on the opening day of the four-day event, but we got used to many things over that brief period.

Because each day we reviewed what we had done and made improvements, I think we were able to interact quite smoothly with visitors toward the end of the exhibition.

Facial recognition + bone conduction headphones

Editor: What technologies were used for this new system?

Izumi: When you go through the motions of chewing with nothing in your mouth, facial recognition sensors detect the movement of your mouth and jaw, and bone conduction headphones let you hear chomping sounds synchronized with that movement.
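One way to turn facial recognition output into a usable chewing signal is to track the distance between the nose and the chin from frame to frame, normalized by the size of the face so that the value does not change when the user leans toward or away from the camera. The sketch below is a hypothetical illustration: it assumes the recognizer returns 2D landmark coordinates under the made-up names `nose_tip`, `chin`, `left_eye`, and `right_eye`. The interview does not say which facial features Phantom Snack actually uses.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def jaw_opening_ratio(landmarks: Dict[str, Point]) -> float:
    """Return the nose-to-chin distance divided by the inter-eye distance.

    The landmark names are hypothetical; dividing by the eye distance
    makes the ratio roughly independent of face size in the image.
    """
    face_scale = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    if face_scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalize")
    return math.dist(landmarks["nose_tip"], landmarks["chin"]) / face_scale


def jaw_signal(frames: Sequence[Dict[str, Point]]) -> List[float]:
    """Convert a sequence of per-frame landmark sets into a jaw signal."""
    return [jaw_opening_ratio(frame) for frame in frames]
```

A rising ratio means the jaw is dropping; the chewing detector and the sound playback would then work on this one-dimensional signal instead of raw landmarks.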

We also use visual images and an aroma diffuser to enhance the chewing experience, so it really does feel as though you are chewing a snack. We prepared chocolate chip cookies, potato chips, a salad, and a completely original item we called Phantom Sweets in order to offer several virtual eating experiences to booth visitors.

Editor: What difficulties did you have when trying to make your idea a reality?

Izumi: Although our theory was sound, we could not be certain it would work until we put it into practice. At the same time, some things such as production costs were still unclear. Therefore, we created a prototype before submitting our project proposal to the company.

Through trial and error, we devised ways of detecting the chewing movements of the jaw while the mouth is closed. Our calculations were relatively simple at first, but we had to work out in code how to process the facial features obtained from the facial recognition system so that those calculations were possible.

There were almost no similar precedents to learn from, so developing the code was really tough. Then, when we were close to solving this problem, we shifted the system from a rule-based approach to machine learning for greater precision.
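The rule-based stage mentioned above can be illustrated with a simple threshold detector running on a per-frame jaw-opening signal, for example a nose-to-chin distance normalized by face size. This is a hypothetical sketch: the function name `detect_chews` and the two thresholds are assumptions, and it does not represent the machine-learning model the team ultimately adopted.

```python
from typing import List, Sequence


def detect_chews(signal: Sequence[float], open_thresh: float,
                 close_thresh: float) -> List[int]:
    """Return the frame indices at which a chew (jaw opens, then closes) completes.

    Two thresholds give hysteresis: the jaw must first rise above
    open_thresh before a later drop below close_thresh counts as a chew,
    so small jitter around a single threshold does not fire extra events.
    """
    if close_thresh >= open_thresh:
        raise ValueError("close_thresh must be below open_thresh")
    chews: List[int] = []
    jaw_open = False
    for i, value in enumerate(signal):
        if not jaw_open and value > open_thresh:
            jaw_open = True
        elif jaw_open and value < close_thresh:
            jaw_open = False
            chews.append(i)  # jaw has closed: a crunch sound would be played here
    return chews
```

For example, `detect_chews([1.00, 1.02, 1.10, 1.12, 1.03, 0.99, 1.11, 1.01], 1.08, 1.04)` returns `[4, 7]`: two open-and-close cycles. Hand-tuned thresholds like these tend to break down across different faces, which is one plausible reason to move to a learned model.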

For the system to work at SXSW, it had to process the faces and jaw movements of people from various racial backgrounds. To prepare for this, we asked many members of the Dentsu Group to provide data samples.

Wishing to create a pleasant experience for users, we paid particular attention to the audio and motion graphics, which are our areas of expertise. With the assistance of professionals who have strong backgrounds in creative advertising production, we achieved quality levels on a par with entertainment standards.

Editor: What was the response at the exhibition space?

Izumi: From the initial stage of development, reactions were favorable among our team and other colleagues in Japan. But I was concerned about how this user experience would be received overseas. Thankfully, people enjoyed it a lot because it is an unusual experience.

Many people laughed like crazy while trying it, and the ones who, like me, tend to eat too many snacks said they wanted to have the system. We also attracted interest from people involved in sustainability issues and researchers in related fields.

Our booth always had visitors lining up to get in, and more than 1,000 people tried Phantom Snack. I believe we met the expectations of those who came to SXSW to experience new and surprising things. More than anything, having so many people experience what we had designed and developed, and then seeing their reactions, was really valuable and gratifying.

Facial exercise to help prevent dementia

Editor: What possibilities do you see for this technology?

Izumi: The act of chewing itself has been reported to benefit health and well-being. It seems to improve concentration and alleviate stress. It also may have other beneficial effects, such as helping prevent dementia.

More research is needed to determine what outcomes Phantom Snack can produce. It could make significant contributions internationally, so I hope to develop it further in collaboration with academic research labs. It could also make hospital meals more pleasant, as patients receiving liquid food could experience the sensation of chewing on solid food. We haven’t tested it in such settings, but Phantom Snack may have potential there.

In addition, I believe virtual eating can soon be used for entertainment-related applications and as a means of reducing calorie intake. On its own, Phantom Snack can be enjoyed as a next-generation indulgence. It can also be used when actually eating something to enhance the taste. For example, food cooked without oil can be made to taste crisper. If it is combined with virtual reality, entertaining and healthy ways of eating can be created in virtual spaces. Conventional VR goggles can be used for visuals since they do not cover the user’s mouth. Phantom Snack can also be used as a controller for mouth and jaw movement in facial exercises. There are many other ways of using the technology.

I have heard that people have been steadily chewing less and less over time. If Phantom Snack could contribute to better health by helping to reverse the trend, that would be just fantastic.

Editor: Finally, what advice would you give exhibitors and people planning to attend SXSW in the future?

Izumi: We exhibited in the Creative Industries Expo, but the XR Experience exhibition seemed to have an edge. For people going in the future, checking out everything that SXSW has to offer may be the best way to follow and experience the latest trends.

For exhibitors, as with developing any service, it is important to test-run the final deliverables, so I recommend building testing into the schedule and making repeated improvements. Our development of Phantom Snack fell behind schedule, but we were still able to test it and make modifications several times. I believe that contributed to the success of our exhibit.

Being able to surprise visitors who expect new experiences is really exciting, so I hope many creators will have the chance to exhibit there in the future.

Product 2: Hugtics / Lets you hug yourself

Hugtics is a technology that allows a user to experience hugging with a specially designed vest and mannequin. We interviewed Atsushi Otaki from Dentsu’s project team and asked him how it is possible to hug oneself.

Proposing a new way to make life happier

SXSW 2023 report editor: Please tell us about Hugtics.

Atsushi Otaki: The name comes from a combination of the words hug and haptics, a technology that virtually reproduces our experience of touch. Hugging is an action that raises happiness levels in human beings, so we are aiming to reinvent the experience of hugging and offer solutions for various issues confronting society today, such as mental health concerns, through the power of this technology. We demonstrated it at South by Southwest 2023 as our first step toward offering people an all-new experience of hugging themselves as well as the experience of remotely receiving a hug from another person.

People’s average life expectancy has been increasing around the world, thanks to advances in medical care. At the same time, however, a growing number of people are suffering from mental health problems. When we were thinking of a new project proposal, we wondered how Dentsu could address this issue and help people lead happier lives. Hugtics was the result of that process.

Editor: How did you assemble a team for SXSW?

Atsushi Otaki: Among the project team members, those who work on the Dentsu Motion Data Lab platform were responsible for all of the planning and production. The platform has collected a great deal of motion data from around the world to help devise solutions to issues related to sports, preventive medicine, and the preservation of traditional culture.

Nobuhiro Takahashi, a researcher at The University of Electro-Communications, participated as a partner in charge of developing Sense-Roid, a tactile communication device that served as the core technology for the project. Pyramid Film Quadra Inc., a company specializing in digital communication, produced the vest as well as videos and websites, while Dentsu ScienceJam Inc. was responsible for measuring brain waves.

A project proposal can end up pie in the sky if discussions go on endlessly. Since we needed to demonstrate the technology interactively with users, we ran experiments early on to identify any problems, and placed importance on making improvements as we developed the project.

Measuring and reproducing a hug

Editor: What technologies were used to create this new way of hugging?

Atsushi Otaki: A vest-shaped device with artificial muscle fibers woven into it was key. When the user hugs a mannequin torso equipped with pressure sensors while wearing this vest, the physical hug is measured and the data is fed back to the artificial muscle fibers, which then reproduce the user’s own hug. This is a completely novel experience.
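The measure-and-reproduce loop described above can be pictured as mapping each pressure sensor on the mannequin to a corresponding region of the vest's artificial muscle fibers. The sketch below is purely illustrative and assumes a one-to-one mapping between sensor zones and fiber zones, plus a linear pressure-to-drive conversion with a hard cap. The zone layout, the linear scaling, and the function name `hug_to_drive_frames` are hypothetical; the interview does not describe the actual control scheme.

```python
from typing import List, Sequence


def hug_to_drive_frames(recording: Sequence[Sequence[float]],
                        max_pressure: float,
                        max_drive: float = 1.0) -> List[List[float]]:
    """Convert a recorded hug into per-zone drive levels for the vest.

    recording: one frame per time step, each frame holding one pressure
    reading per sensor zone (hypothetical layout). Each reading is scaled
    linearly and clamped to [0, max_drive], so an unusually hard squeeze
    on the mannequin cannot drive the vest harder than the cap.
    """
    if max_pressure <= 0:
        raise ValueError("max_pressure must be positive")
    frames: List[List[float]] = []
    for frame in recording:
        levels = [min(max(p / max_pressure * max_drive, 0.0), max_drive)
                  for p in frame]
        frames.append(levels)
    return frames
```

Because the recording is just a list of frames, the same data could be replayed later or on another vest, which is the idea behind storing hugs for reproduction.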

We used Dentsu ScienceJam’s sensory analyzer to detect the user’s brain waves and measure several emotional changes related to happiness that occur when hugging.

Using a proprietary algorithm, the measurement results are presented visually with colored LED lights attached to the vest, allowing the user to intuitively see the positive effects.
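The interview states only that a proprietary algorithm turns the measurements into colored LED output. Purely as an illustration of the general idea, the sketch below assumes a single happiness-related score normalized to the range 0 to 1 and blends linearly between two colors. The score, the two colors, and the blending rule are all assumptions and are not Dentsu ScienceJam's method.

```python
from typing import Tuple

RGB = Tuple[int, int, int]

# Hypothetical endpoint colors chosen for illustration only.
LOW_COLOR: RGB = (0, 80, 255)     # low score shown as blue
HIGH_COLOR: RGB = (255, 120, 0)   # high score shown as orange


def score_to_color(score: float, low: RGB = LOW_COLOR,
                   high: RGB = HIGH_COLOR) -> RGB:
    """Linearly blend from `low` to `high` as the score goes from 0 to 1.

    Scores outside [0, 1] are clamped so the LEDs always get a valid color.
    """
    s = min(max(score, 0.0), 1.0)
    r, g, b = (round(a + (c - a) * s) for a, c in zip(low, high))
    return (r, g, b)
```

A linear blend keeps the color change smooth as the score rises, which fits the goal of letting the wearer see the effect at a glance.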

Because the vest and the artificial muscles have been combined, we can closely reproduce the feeling of being hugged by converting the feedback from the artificial muscles into surface pressure. Had we used artificial muscles alone, the pressure from their woven cable structure would have been too strong, so we carefully checked the effects that various materials and thicknesses produced.

Editor: What was the response at the exposition?

Atsushi Otaki: Many of our visitors were really curious about Hugtics. They not only tried it, but also commented on it and asked questions. We engaged in many discussions with them. The responses varied, but were all positive. Some said it was cool, and others laughed, saying it was so crazy, but in a good way.

One visitor wanted to use the technology to record and save his mother’s hug. Another said he felt happy because it reminded him of his late father’s hug. One more expressed a desire to send hugs to orphaned children. It was fascinating how the act of hugging oneself could lead to such responses. I think the comments we received can give us ideas for future development.

We were so busy running our booth that we had almost no time to look around, but visitors and people from other companies told us that Dentsu’s booths attracted the most visitors at the exhibition.

We also heard that many other booths had good products and services, but didn’t present them effectively, while Dentsu’s ideas were really good and skillfully demonstrated. I was genuinely delighted to receive such feedback. For Dentsu, exhibiting at SXSW is not only a valuable experience, but also a chance to show off what we do so well as professionals—communication.

Replicating hugs of significant others beyond time and space

Editor: What is your outlook for Hugtics and what potential does it have?

Atsushi Otaki: In terms of the technology, we hope to enhance the quality and novelty of the hugging experience. There are a few developmental paths we would like to take.

One is to make the hugs feel more human-like and warm, which could help raise the effectiveness of mental healthcare. Another is to bring hugging into entertainment, particularly in situations where people could not otherwise hug each other, which could broaden its range of applications.

On the business side of things, we want to increase the number of Hugtics users by collaborating with other companies, because the experience of actually using Hugtics cannot be demonstrated very well in promotional videos alone.

While stepping up our collaboration using our current prototype, over the medium to long term we want to develop custom-made Hugtics products tailored to specific needs, and set up Hugtics facilities in a wide range of public places.

Hugtics offers possibilities that go beyond time and space. The hugging data obtained from Hugtics is always stored, so it is possible to create a data platform for hugging. That means the hugging data of a special someone, like a partner or family member, or even a famous person like a pop idol or star athlete, can be stored regardless of the time and place it was obtained, and then reproduced anytime.

We want to use and continue refining the hugging data to provide solutions in various settings, such as healthcare, combating loneliness, and improving the metaverse.

Editor: Finally, what advice would you give exhibitors and people planning to attend SXSW in the future?

Atsushi Otaki: In my view, the wide range of discussions we engaged in and the feedback we received at the venue were the most valuable aspects of exhibiting. We were also able to network with other exhibitors. Anyone interested in SXSW should definitely give it a try, because there they will discover new things.

It also provides a good benchmark with respect to project development. By setting the goal of exhibiting at SXSW, a team can get highly motivated. You cannot take members who lack motivation or confidence to a venue attended by people from all over the world. We had confidence in the quality of our idea because we were selected through an internal company competition. In the process, we were given all kinds of advice, which enabled us to refine our ideas and improve the project.

If you have ideas about which you are passionate, you can realize them and achieve your goals. Anyone thinking about exhibiting should definitely seize the opportunity.

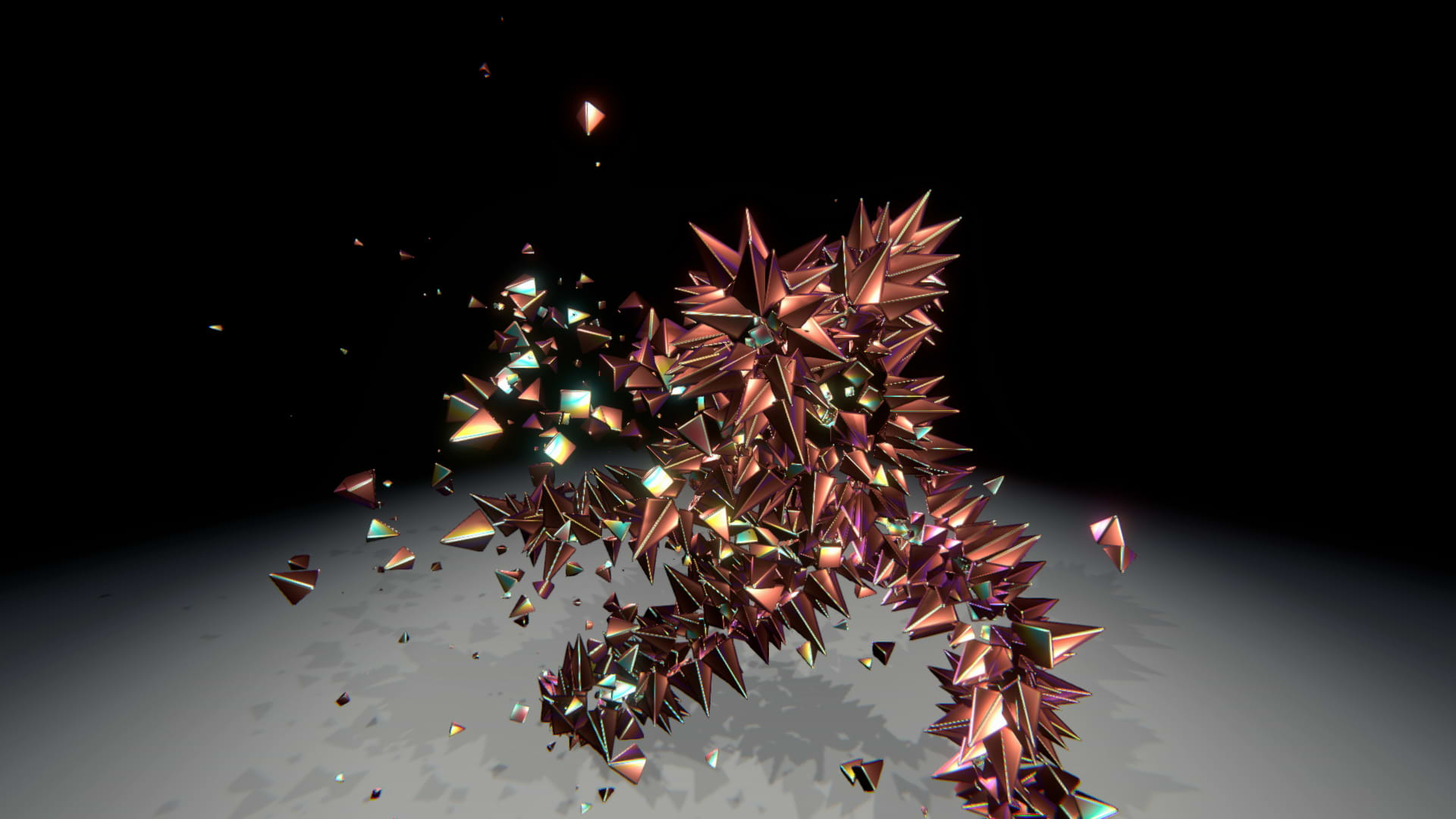

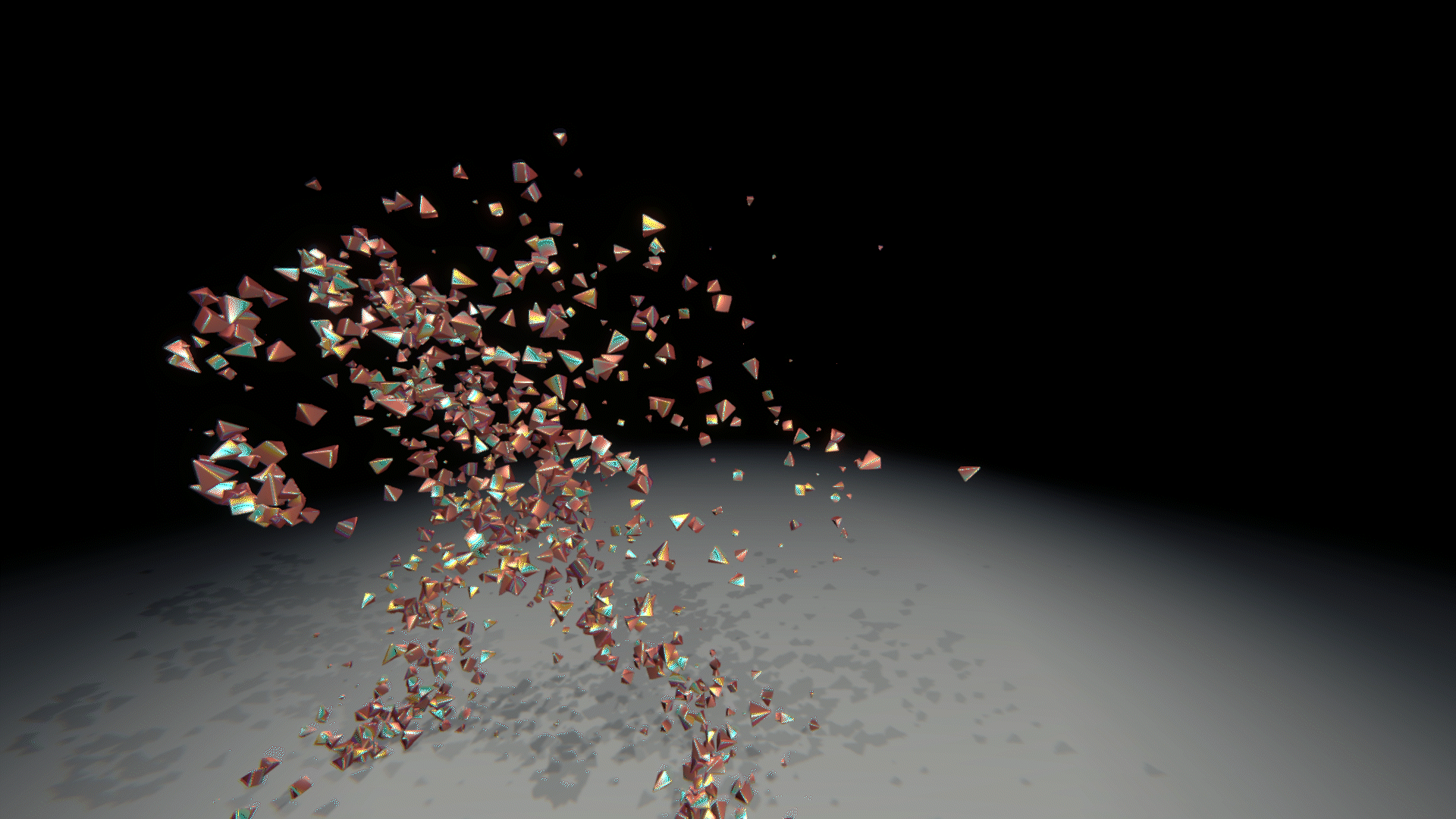

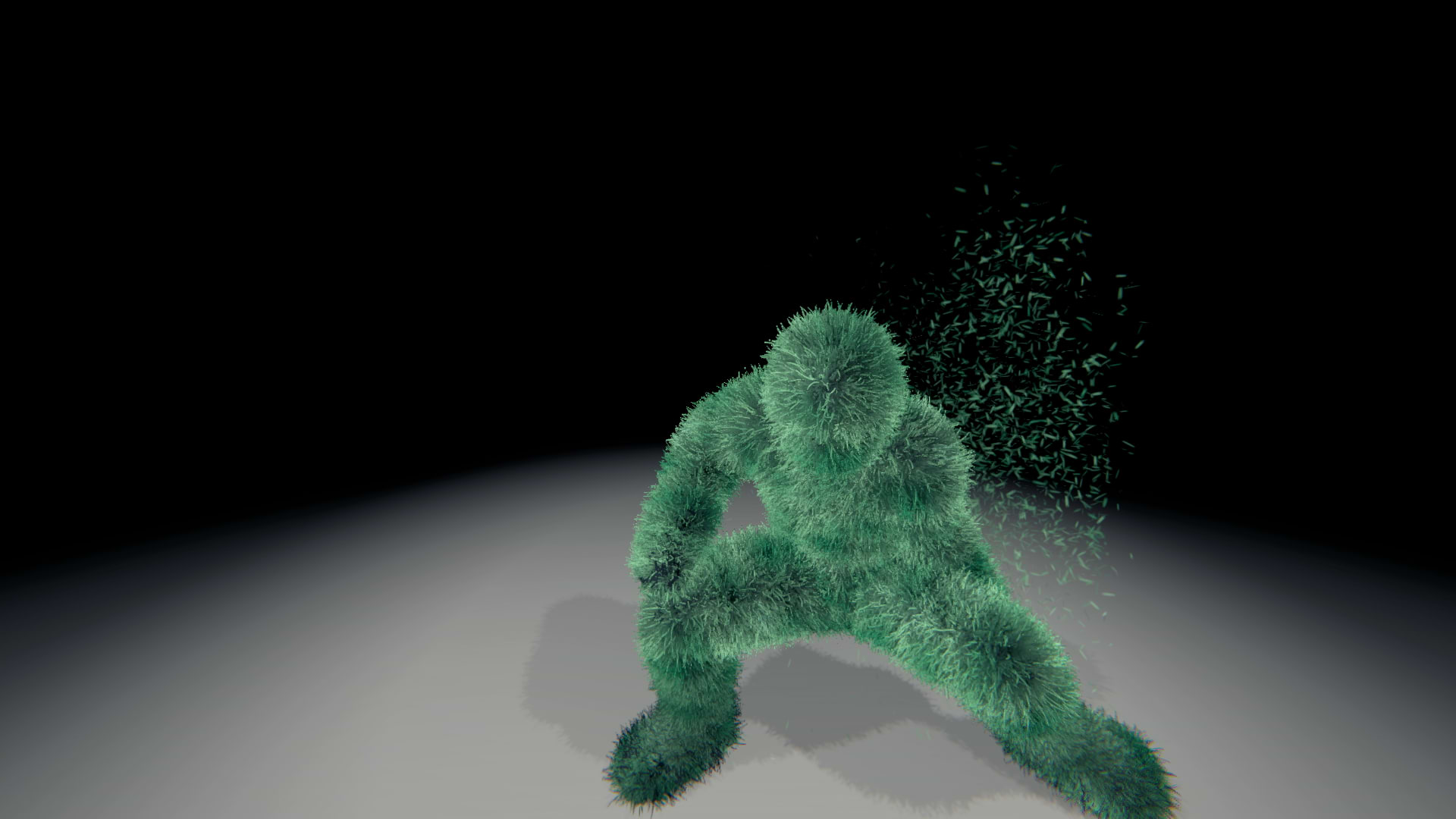

Product 3: Transcentdance / Seeing What We Smell

Transcentdance is an augmented reality (AR) system that converts scent-based data into a dance performance. We interviewed Shota Tanaka of Dentsu Creative X Inc., a member of the project team, and asked him how scents can be visually represented.

Using AR to make a scent dance

SXSW 2023 report editor: Please tell us about Transcentdance.

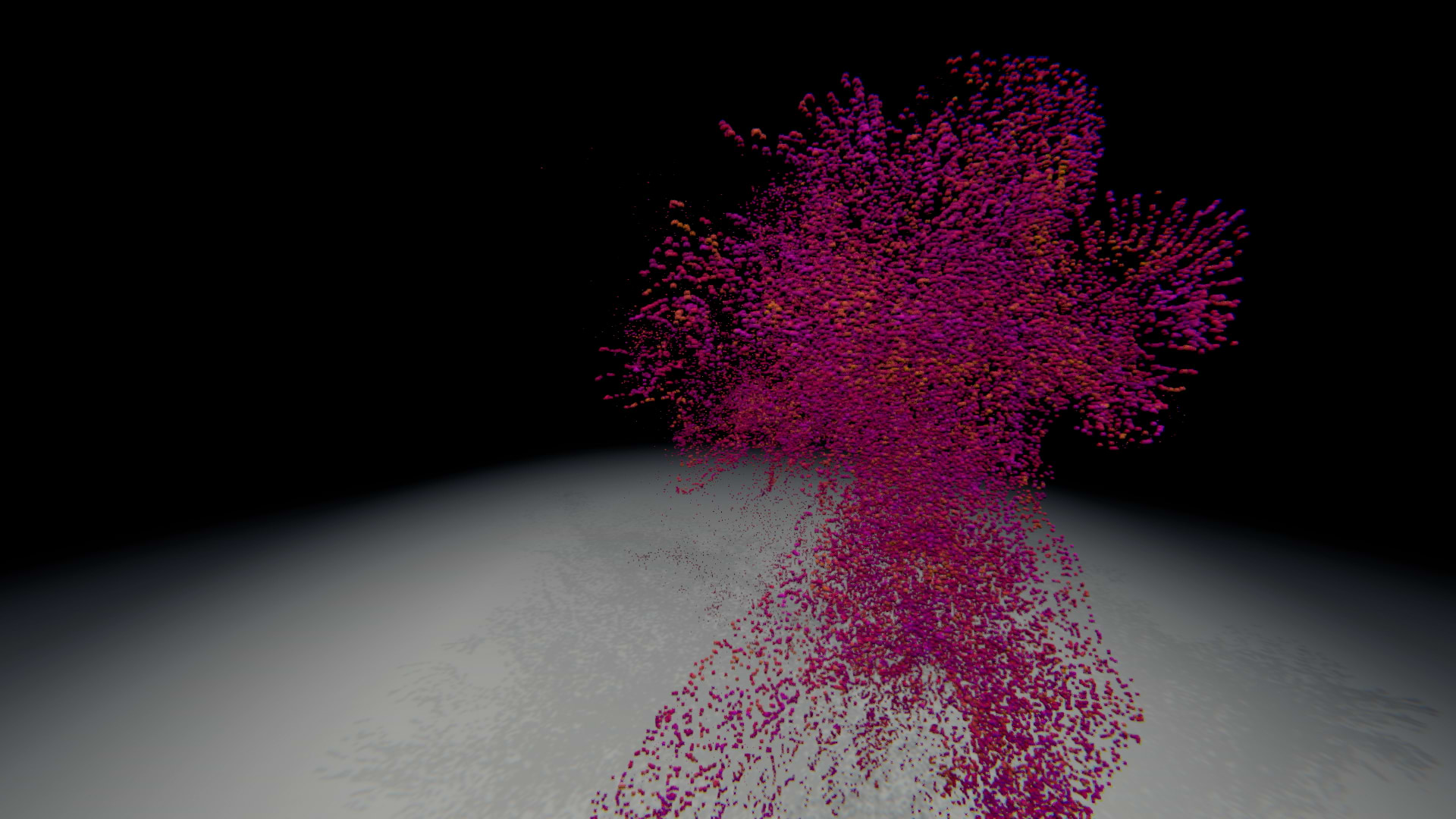

Shota Tanaka: The name is formed from the words transcend, scent, and dance. In a nutshell, the AR system visually transforms a scent into a dance to create enjoyable content. When a user selects a scent, sensors digitize its composition and the data is analyzed using AI. Then AR is deployed to generate a character that represents the scent and have it dance in real time according to the type and composition of the scent.
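For illustration only, the sensing-to-dance step can be approximated as matching a sensor reading against a reference reading for each prepared scent and then selecting that scent's dance. The sketch below swaps in a simple nearest-neighbor match by cosine similarity in place of the AI analysis the team used, and it only selects a dance rather than adapting it to the scent's composition. The sensor vector layout, scent names, and dance identifiers are hypothetical.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def pick_dance(reading: Sequence[float],
               references: Dict[str, Sequence[float]],
               dances: Dict[str, str]) -> str:
    """Return the dance for the reference scent most similar to the reading."""
    best = max(references,
               key=lambda name: cosine_similarity(reading, references[name]))
    return dances[best]
```

Cosine similarity compares the shape of the sensor response rather than its overall strength, so a faint and a strong sample of the same scent would still match the same reference.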

Creating a visual image was difficult because scent information is transient, so we added temporal and spatial data to generate the motions of dancing.

I had always felt it was really difficult to describe scents in my regular ad production work, so I decided to initiate this project. I wondered if it was possible to express odors and visualize them.

When the company solicited ideas for SXSW, I took that opportunity to submit a proposal. Olfaction data, or data on the sense of smell, has yet to be used in entertainment, so I thought this proposal had potential.

Editor: How did you assemble a team for SXSW?

Tanaka: We put members of Dentsu Creative X in charge of project planning and production, and members of Dentsu Craft Tokyo in charge of creating the booth. In addition, we worked with sensory evaluators from Revorn Co., Ltd., which supplied the odor sensors. Natsuko Kuroda, a choreographer and dancer, assisted us when we created dance movements for the scents.

From the time we submitted our idea to express scents through dance, it took about 18 months to complete the project. Most of that time was spent formulating the concept, planning, and researching the odor sensors. Only about two months were needed to prepare the equipment and materials for the booth.

Collaboration with sensory evaluators and a dancer

Editor: What was the most challenging aspect of turning the idea into reality?

Tanaka: Our sense of smell is transient and extremely difficult to convey in words. Since each person experiences the same odor differently, making a convincing visual representation of a scent was a challenge.

First, to convert scents into linguistic information, we narrowed the number of scents for the dance visuals to six and had the sensory evaluators score each scent on more than 50 keywords, such as sweetness and freshness, using a five-point scale to represent intensity.
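The evaluators' ratings amount to a profile for each scent: a score from 1 to 5 on each keyword. The sketch below assumes several evaluators rate the same keyword list and averages their scores, then picks the strongest keywords as a brief for the dancer. The averaging, the keyword names, and the top-n selection are illustrative assumptions, not the team's documented procedure.

```python
from statistics import mean
from typing import Dict, List


def keyword_profile(ratings: List[Dict[str, int]]) -> Dict[str, float]:
    """Average several evaluators' 1-5 keyword ratings into one scent profile."""
    if not ratings:
        raise ValueError("need at least one evaluator")
    keywords = set(ratings[0])
    for r in ratings:
        if set(r) != keywords:
            raise ValueError("every evaluator must rate the same keywords")
    profile: Dict[str, float] = {}
    for kw in sorted(keywords):
        scores = [r[kw] for r in ratings]
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"rating for {kw!r} is outside the 1-5 scale")
        profile[kw] = mean(scores)
    return profile


def strongest_keywords(profile: Dict[str, float], n: int = 3) -> List[str]:
    """Return the n highest-scoring keywords (ties broken alphabetically)."""
    return sorted(profile, key=lambda kw: (-profile[kw], kw))[:n]
```

For example, two evaluators rating a scent `{"sweetness": 5, "freshness": 2, "woody": 1}` and `{"sweetness": 4, "freshness": 3, "woody": 1}` give a profile of 4.5, 2.5, and 1, so the two strongest keywords are sweetness and freshness.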

Then we had the contemporary dancer express those words and the actual scents through dance movements. By expressing the scent, as captured in words, through dance, we were able to create a system that lets viewers freely imagine the scent without being bound by stereotypes.

Editor: What response did you get at SXSW?

Tanaka: My impression was that many of the visitors to our booth were very interested in the scents. When we set up the system in Japan, I had been worried about whether the visual representations of the scents would be convincing. But when people at the exhibition actually tried Transcentdance, they seemed really keen to understand it.

Many visitors suggested business ideas, offering numerous thoughts on how Transcentdance technology might be applied in businesses with which they were involved.

When we exhibited the AR system at a technology trade show in Japan, we were busy presenting and so hardly had a chance to get any feedback. At SXSW, however, we were able to interact with attendees, which was a nice change.

Technology with creative potential

Editor: What issues are you facing now and what are the future prospects for this technology?

Tanaka: Our first issue is that the range of scents we can represent is too narrow. We have created only six types of dance, but we need more in the future. If we could mix them, a wider variety of scents could be expressed.

Beyond increasing the number of dance types, I want to build a database of dances, for example by working with multiple dancers. By training AI on that database, I hope to have dances created automatically.

The second issue is that there are still too few applications in entertainment. Currently, systems that let people experience scents with AR are not very impressive, and none of them allow interaction with users.

Incorporating a sense of smell in entertainment applications has a long way to go, so we want to break new ground by developing interactive systems. By finding ways to make the sense of smell fun, I think the technology can be applied in various settings, such as conducting research on smell, promoting companies that make use of odors, and exhibiting scents.

Editor: Finally, what advice would you give people planning to exhibit or attend SXSW in the future?

Tanaka: I believe both exhibitors and attendees will find it exciting, as the displays showcase everything from technology-related prototypes to finished products.

The people at SXSW seemed to really appreciate the creative potential and possibilities of technology, and it was refreshing to see tech given the same status as music and movies. There were many forward-looking people present, and the atmosphere was fantastic. I definitely recommend checking it out.


Inquiries

Kenta Arai (Dentsu)

https://www.dentsu.co.jp/en/contactus/